Note: This article is also available in Spanish here.

I'm not a gamer, although I've probably played with machines/computers more than most girls my age. My path has been:

- A handheld game, and Pong and tennis on my uncle's console.

- An MSX. With that one, in addition to playing, I learnt what an algorithm and a program were. I started to write small programs in BASIC, and I copied and ran the source code of small games and graphics programs that I found in MSX magazines.

My parents considered arcade machines in bars to be like slot machines, so they were banned for us (even pinball; only table soccer was spared, and only if my father was playing with us).

On the MSX I played Magical Tree, Galaxians, Arkanoid, Konami's Sports Games, Spanish games from Dinamic, and some arcade games like Golden Axe, Xenon, and maybe some more. The next computers (a PC AT, later a 286) were not really for gaming; let's say that we played more with the printer (Harvard Graphics, Bannermania...). Later, I became more interested in other things than in computer games. Then came high school homework, dBase III, and later university, programming again, and more. That was the end of gaming: the computer was for office work and university homework.

Later came the internet, and since then reading, writing and communicating have been more interesting to me than playing.

I was not good at playing, and if you are not good, you play less and you don't get better, so you begin to find other ways to lose your time, or to win it :)

The new generation

My son is 6 years old now, and through him I'm living a second adventure with games. Games have changed a lot, and our family computing tries to stay on the libre software side whenever I am the one who decides, so challenges sometimes arise.

Android (phone and tablet)

The kid has played games on the Android phone and tablet since he was a baby. We tried some of the popular games of recent years. I am not so keen on banning things, but I don't feel comfortable with the popular Android games (advertisements, nonfree software, addictive elements, massive data collection and possible surveillance...), so I try to keep control without being Cruella de Vil. Some techniques I use:

- We agree on how much time he can use the tablet, and set a tomato timer to keep track (thanks, Pomodoro from F-Droid).

- I turn on airplane mode and switch off the internet connection whenever I can. I never register an account or log in to play (if it's mandatory to log in to Google or create an account, sorry, but we cannot play, or we create a new empty profile).

- I put up barriers to installing games. For example, I say that he should uninstall 2 or 3 games first if he wants a new one, or that he should explain clearly why he wants that game and why the installed ones have suddenly become boring.

- I've never bought games for Android, and I don't plan to buy them in the future. I prefer to donate to libre game projects.

- I try to divert attention to other games (not computer games, usually).

- If an equivalent non-computer game exists, we play that one (tic-tac-toe, hangman, battleship...).

- I seldom play on the phone/tablet, unless we play together.

On the other hand, there is no Google Play on my phone, so we have been able to discover the Games section of F-Droid.

We have tried the following (all of them available in F-Droid, with emphasis on the ones he liked best): 2048, AndroFish, Bomber, Coloring for Kids, Core, Dodge, Falling Blocks, Free Fall, Frozen Bubble, HeriSwap (this one on the tablet), Hex, HyperRogue, Meerkat Challenge, Memory, Pixel Dungeon, Robotfindskitten, Slow it!, Tux Memory, Tux Rider, Vector Pinball.

Playing on my phone with CyanogenMod, using games downloaded from F-Droid, gives me a relief similar to playing a non-computer game, at least with the games listed above. Maybe it is because they are simpler games, or because they remind me of the ones I played long ago. But it's also the peace of mind of knowing that they are libre software, that they have been audited by the F-Droid community, and that they don't abuse the user.

The same happens with Debian, which brings me to the next part of this blog post.

Computer games: Debian

The kid learnt to play on the tablet and the phone before the computer, because our computers have no joysticks or touchscreens. He learnt to use the touchpad before the mouse, because it's easier and we have no mouse at home. He learnt to use the mouse at school, where they use educational games on CD or on the web, using Flash :(

So the Flash player appears and disappears from my Debian setup depending on how much he wants to play the "school games".

Until recently, on the computer we played GCompris, ChildsPlay, and TuxPaint.

When he learnt to use the arrow keys, I installed Hannah and he liked it a lot, especially when we learnt to run hannah -l 900 :)

Later, ClanTV showed advertisements for some online computer games about his favorite series. Those games turned out to need Flash or a framework called Unity3D (no, it's not Ubuntu's Unity). After digging a bit I decided that I was not going to install that #@%! on my Debian, so when he insisted on playing those games, I booted the Windows 7 partition on his father's laptop and installed it there.

Windows is slow and sad on that computer, and web games built with that framework are not very light, so luckily they have not become very interesting to him.

We have not played much more on the computer, except maybe for an incursion into Minetest, which brings me to the next section.

(Not without stating my eternal thanks to the Games Team in Debian. I think they do very important work, and next year I'll try to get involved in some way, because I know that the future of our family's computer games is tied to libre games in Debian.)

PlayStation 3 and Minecraft

Some time ago my husband bought a PlayStation 3 for home. The shop had discounts, and he would play together with the kid, and so on.

The console came with some free games (included in the price), but most of them were rated 13+ or so, so the only two left were Pro Evolution Soccer and Minecraft.

I decided not to connect the console to the network. Maybe we are missing out on cool things, but I feel safer that way. So: no Ethernet cable plugged in, and no registration in the Sony shop (or whatever it's called).

The controllers are quite complex for all three of us. They are DualShock don't-know-what, and I think there is something (software) that makes the game adapt to the person playing, because my husband plays worse after our son has played, when they take turns with the same controller.

The kid liked Minecraft. I didn't know anything about that game (well, I knew that there was a libre clone called Minetest), so to learn the basics I had a look at the wiki and searched for how-to videos, and we began learning. Now the kid can read a bit, so he needs less help. He has watched a lot of videos about Minecraft, so he is interested in exploring and building.

I had a look at Minetest and installed it on Debian. Having to use the keyboard is a disadvantage, and we didn't know how to dig, so it was not very attractive at first sight. I have read a bit about using the PS3 controller on the computer via USB, and it seems to work. However, I suppose I would need to write something that maps each controller button to the corresponding key, and therefore to the corresponding action in Minetest. This is work, I am lazy, and the boy does not seem very interested in playing on the computer.

Watching the videos, we inferred that it's possible to download saved games and worlds and upload them to the console. We did some tests. I wanted to upload a saved game featuring an amusement park, but the file was in a folder named NPEB01899*. Even though the PS3 saw it and copied it from USB to the console, it later didn't appear in the list of saved games (our saved games were in folders named BLES01976). Renaming the folder didn't work either, of course.

I realized that we had run into Sony's restrictions, so I searched for more info. Saved games are encrypted with a key. You cannot use saved games from consoles in another world region, or from a different media type than ours (it seems the game can be played from a disc or bought from the digital shop). Very ugly, all of this! I read somewhere that there is some software (libre software, BTW) that can break the encryption and re-encrypt the saved game with the region and media type of your console. However, it seems the program only works on Windows, and it needs a console ID that we don't have, because we didn't register the console on the PlayStation Network. All of this looks like shaky ground to me, unpleasant stuff, and I don't want to spend time on it. Maybe I should learn a bit more about Minetest, make it work, make it interesting, and tell Sony to go fly a kite.

Finally, I found a saved game in the same format as ours (BLES01976). It's not an amusement park, but it is a world with interesting places to explore and many things already built. I tried to import it and it worked, so my son will be happy for some time, I suppose.

We have tried Minetest on the tablet too, but the touchscreen is not comfortable for this kind of game.

I feel quite frustrated and angry about Sony's restrictions on saved games. So I suppose that in the coming months I'll try to learn more about Minetest in Debian, game controllers in Debian, and games in Debian in general. I hope to be able to offer cool stuff to my son, so that he becomes more interested in playing in a safe environment that does not abuse the user.

And with this, I come to the final section.

Libre games in GNU/Linux, Debian, and info about games on the internet

When we searched for info about games on the internet, I often found that we had to leave the safe environment. There were web pages with download links that might or might not contain what they claimed, advertisements, and video blogs with language unsuitable for kids (or for anyone who loves their mother tongue)... That's why I believe the way forward is to dig into the libre games provided by the distro you use (Debian in my case). Here I bookmark a list of websites with info that will surely be useful to me, to read in depth:

We ll see how it goes.

Comments?

You can comment on this Pump.io thread.

Filed under: My experiences and opinion

Tagged: Debian, English, F-Droid, Free culture, Free Software, Games, libre software, Moving into free software

What happened in the reproducible builds effort this week:

Presentations

On June 7th, Reiner Herrmann presented the project at Gulaschprogrammiernacht 15 in Karlsruhe, Germany. Video and audio recordings in German are available, and so are the slides in English.

Toolchain fixes


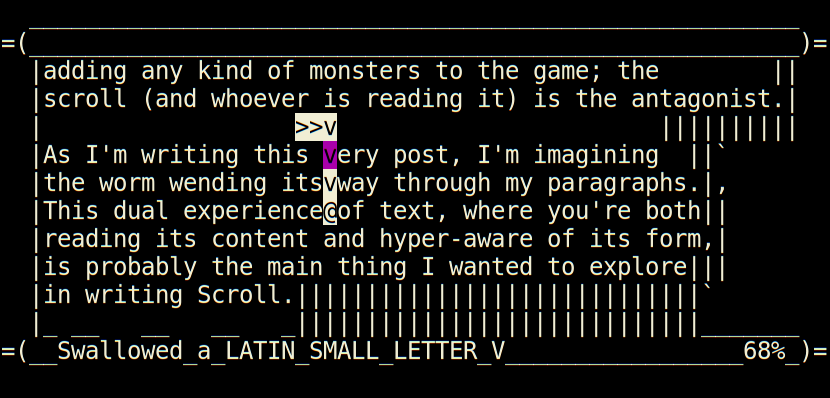

Slow start today; I was pretty exhausted after yesterday and last night's work. Somehow though, I got past the burn and made major progress today.

All the complex movement of both the player and the scroll is finished now, and all that remains is to write interesting spells, and a system for learning spells, and to balance out the game difficulty.


Ever worked at a company (or on a codebase, or whatever) where it seemed like, no matter what the question was, the answer was written down somewhere you could easily find it? Most people haven't, sadly, but they do exist, and I can assure you that it is an absolute pleasure.

On the other hand, practically everyone has experienced completely undocumented systems and processes, where knowledge is shared by word-of-mouth, or lost every time someone quits.

Why are there so many more undocumented systems than documented ones out there, and how can we cause more well-documented systems to exist? The answer isn't "people are lazy", and the solution is simple, though not easy.


The perl 5.20 transition is over, debconf14 is over, so I should have more time for RC bugs? Yes and no: I fixed some, but only in "our" (as in: pkg-perl) packages:


2013 is nearly all finished up and so I thought I'd spend a little time writing up what was notable in the last twelve months. When I did so I found an unfinished draft from the year before. It would be a shame for it to go to waste, so here it is.

2012 was an interesting year in many respects with personal

This morning Zoe woke up again at around 4am and ended up in bed with me. I don't even remember why she woke up, and neither does she, but she's assuring me it won't happen tomorrow morning. We'll see.

As a result of the disturbed night's sleep, we had a bit of a slow start to the day. Zoe was happy to go off and watch some TV after she woke up for the day, and that let me have a bit more of a doze before I got up, which made things vaguely better for me.
